Software Testing

We Mean Testing

Enhancing customer's quality by exploring testing thoughts

Test Framework:

- Processes, Standards, Checklists, Guidelines and Templates

- Re-usability of the Test Artifacts

- Test case reviews and review effectiveness measurement

- Efficiency in the functional testing

Approach:

- Risk-based testing approach

- Optimization of test cycles

- Metrics Management at project and program level

- Metrics-based dashboards

- Tools for metrics data capture

- Seamless integration between defect management and test cases

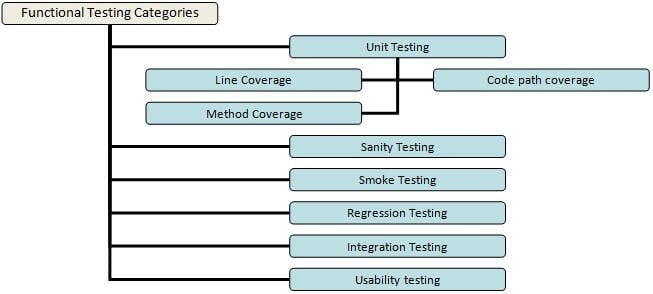

Functional Testing: A Detailed Guide

In the simplest words, functional testing checks an application, website, or system to ensure that it is doing exactly what it is meant to.

Functional testing is the process through which QAs determine if a piece of software is acting in accordance with pre-determined requirements. It uses black-box testing techniques, in which the tester has no knowledge of the internal system logic.

Functional testing is only concerned with validating if a system works as intended.

- Unit Testing: This is performed by developers, who write scripts that test whether individual components/units of an application match the requirements. This usually involves writing tests that call the methods in each unit and validate that the values they return match the requirements.

  In unit testing, code coverage is mandatory. Ensure that test cases exist to cover the following:

  - Line coverage

  - Code path coverage

  - Method coverage

- Smoke Testing: This is done after the release of each build to ensure that the software is stable and free of obvious anomalies.

- Sanity Testing: Usually done after smoke testing, this is run to verify that every major functionality of an application is working perfectly, both by itself and in combination with other elements.

- Regression Testing: This test ensures that changes to the codebase (new code, debugging strategies, etc.) do not disrupt existing functions or introduce instability.

- Integration Testing: If a system requires multiple functional modules to work effectively, integration testing is done to ensure that individual modules work as expected when operating in combination with each other. It validates that the end-to-end outcome of the system meets these necessary standards.

- Beta/Usability Testing: In this stage, actual customers test the product in a production environment. This stage is necessary to gauge how comfortable customers are with the interface. Their feedback is used to implement further improvements to the code.
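The unit testing item above can be illustrated with a minimal sketch using Python's standard `unittest` module. The function `apply_discount` and its requirements are hypothetical, invented only to have something to test. The three tests call the unit, compare its return values to the expected ones, and exercise the error path so that every line and code path of the unit is covered.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: return the price reduced by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_returns_expected_value(self):
        # Normal path: the returned value must match the requirement.
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_percent_leaves_price_unchanged(self):
        # Boundary value at the lower end of the allowed range.
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_rejects_out_of_range_percent(self):
        # Error path: exercises the branch that raises ValueError.
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)
```

Saved to a file, these tests could be run with `python -m unittest <file>`. A coverage tool can then report which lines and paths the tests actually reached.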

What is Automation Testing?

As the name suggests, automation testing takes software testing activities and executes them via an automation toolset or framework. In simple words, it is a type of testing in which a tool automatically executes a set of tasks in a defined pattern.

It takes the pressure off manual testers, and allows them to focus on higher-value tasks - exploratory tests, reviewing test results, etc.

Essentially, a machine takes over mundane, repetitive, time-consuming tasks such as regression tests. Automation testing is essential to achieving greater test coverage within shorter timelines, as well as greater accuracy of results.

How to move from Manual to Automation Testing

To begin with: ask two questions.

1. What to Automate?

2. How to Automate?

What To Automate?

- Automate tests necessary for a frequent release cycle. That may include smoke tests, regression tests, and the like. Basically, automate tests that speed up the entire test cycle. Remember, lower manual intervention equals faster results.

- Automate tests based on technical and business priority. Think like this: would automating this test help the business? Would automating this test help simplify technical complexities? If the answer is yes to either question, automate.

- Automate based on usability. Some tests simply work better when performed manually. Tool dependency can also limit automation potential for a certain testing team or organization.
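The three criteria above can be sketched as a simple decision rule. This is only an illustration: the `Candidate` fields, the `min_runs` threshold, and the sample test names are assumptions, not part of any tool or standard.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical description of a manual test being considered for automation."""
    name: str
    runs_per_release: int       # how often the test runs in each release cycle
    business_priority: bool     # automating it would help the business
    technical_priority: bool    # automating it would simplify technical complexity
    needs_human_judgment: bool  # works better manually (e.g. look-and-feel checks)


def should_automate(test: Candidate, min_runs: int = 3) -> bool:
    # Tests that rely on human judgment stay manual regardless of priority.
    if test.needs_human_judgment:
        return False
    frequent = test.runs_per_release >= min_runs
    # Frequent tests, or tests with business or technical priority, qualify.
    return frequent or test.business_priority or test.technical_priority


candidates = [
    Candidate("smoke_login", 10, True, False, False),
    Candidate("layout_look_and_feel", 5, True, False, True),
    Candidate("rare_admin_export", 1, False, False, False),
]
print([c.name for c in candidates if should_automate(c)])  # ['smoke_login']
```

In practice a team would likely weigh these factors rather than apply hard yes/no rules, but the sketch shows how the questions can be made explicit.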

How To Automate?

To start with, keep in mind that not all tests can be automated. By adhering to the three strategies in the previous section, it becomes easier to initiate automated testing in a practical manner.

- Start small. 100% automation is not the goal, and also not possible. Write smaller test cases because they are easier to maintain and reuse. Move a small number of tests to an automation platform, run the tests, analyze the results and decide if the process proved to be beneficial to the software development process.

- If significant benefits do come from the operation, move more tests to automation. Increase not only the number of automated tests but also the types of tests being automated. Remember that this is still an experimental stage. It is possible that certain tests will prove to be inefficient in automation, and have to be moved back to manual testing.

- Map test cases with each method or function in order to gain better test coverage. Label your test cases for easier identification, so the team can quickly know which tests to automate. This also helps implement better reporting.

- When starting automation testing, begin by exploring new areas of the application manually. Then, create a risk plan that details what needs to be automated, based on business and technical priorities.

- Use analytics to determine end-user preferences. Create a list of browsers and devices that users are most likely to access the software with. This helps automation testing cover the right interfaces and optimizes software performance on the right user avenues.

Performance Testing

Performance Testing is a software testing process used for testing the speed, response time, stability, reliability, scalability and resource usage of a software application under a particular workload. The main purpose of performance testing is to identify and eliminate the performance bottlenecks in the software application. It is a subset of performance engineering and is also known as "Perf Testing".

The focus of Performance Testing is checking a software program's:

- Speed - Determines whether the application responds quickly.

- Scalability - Determines the maximum user load the software application can handle.

- Stability - Determines whether the application is stable under varying loads.

Why Do Performance Testing?

- The features and functionality supported by a software system are not the only concern. A software application's performance, such as its response time, reliability, resource usage and scalability, also matters. The goal of Performance Testing is not to find bugs but to eliminate performance bottlenecks.

- Performance Testing is done to provide stakeholders with information about their application regarding speed, stability, and scalability. More importantly, Performance Testing uncovers what needs to be improved before the product goes to market. Without Performance Testing, software is likely to suffer from issues such as running slowly while several users use it simultaneously, inconsistencies across different operating systems, and poor usability.

- Performance testing determines whether the software meets speed, scalability and stability requirements under expected workloads. Applications sent to market with poor performance metrics due to nonexistent or poor performance testing are likely to gain a bad reputation and fail to meet expected sales goals.

- Also, mission-critical applications like space launch programs or life-saving medical equipment should be performance tested to ensure that they run for a long period without deviations.

Types of Performance Testing

- Load testing - checks the application's ability to perform under anticipated user loads. The objective is to identify performance bottlenecks before the software application goes live.

- Stress testing - involves testing an application under extreme workloads to see how it handles high traffic or data processing. The objective is to identify the breaking point of an application.

- Endurance testing - is done to make sure the software can handle the expected load over a long period of time.

- Spike testing - tests the software's reaction to sudden large spikes in the load generated by users.

- Volume testing - a large volume of data is populated in a database and the overall software system's behavior is monitored. The objective is to check the software application's performance under varying database volumes.

- Scalability testing - The objective of scalability testing is to determine the software application's effectiveness in "scaling up" to support an increase in user load. It helps plan capacity addition to your software system.
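A load test, in its simplest form, issues many concurrent requests and records how long each one takes. The sketch below uses only the Python standard library. `handle_request` is a hypothetical stand-in for the operation under test (it just sleeps for 10 ms), and the user and request counts are arbitrary. Dedicated load testing tools do far more, so treat this as an illustration of the idea only.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request() -> None:
    """Hypothetical stand-in for the operation under test."""
    time.sleep(0.01)


def timed_call(_: int) -> float:
    """Run one request and return its latency in seconds."""
    start = time.perf_counter()
    handle_request()
    return time.perf_counter() - start


def run_load(concurrent_users: int, total_requests: int) -> dict:
    """Issue total_requests calls using concurrent_users worker threads."""
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        latencies = list(pool.map(timed_call, range(total_requests)))
    elapsed = time.perf_counter() - started
    return {
        "requests": len(latencies),
        "median_s": statistics.median(latencies),
        "max_s": max(latencies),
        "throughput_rps": len(latencies) / elapsed,
    }


report = run_load(concurrent_users=5, total_requests=20)
print(report)
```

Raising `concurrent_users` well beyond the expected load moves the same sketch toward a stress test, and running it for a long duration moves it toward an endurance test.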

Accessibility Testing

Accessibility Testing is a type of Software Testing performed to ensure that the application being tested is usable by people with disabilities such as hearing impairment or color blindness, by older users, and by other disadvantaged groups.

People with disabilities use assistive technology which helps them in operating a software product. Examples of such software are:

- Speech Recognition Software: Converts the spoken word to text, which serves as input to the computer.

- Screen Reader Software: Used to read out the text that is displayed on the screen.

- Screen Magnification Software: Used to enlarge the content on the monitor and make reading easier for vision-impaired users.

- Special Keyboard: Made to ease typing for users who have motor control difficulties.
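Screen readers rely on text alternatives to describe images, so one common automated accessibility check is to look for images without an `alt` attribute. The sketch below uses Python's standard `html.parser` module; the class and function names are hypothetical. As a simplification, it also flags an empty `alt`, although decorative images may legitimately use one, so results would need manual review.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collects the src of <img> tags that have no non-empty alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # A missing, valueless, or blank alt is treated as a finding.
        if not (attributes.get("alt") or "").strip():
            self.missing.append(attributes.get("src", "<no src>"))


def images_missing_alt(html: str) -> list:
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing


page = '<img src="logo.png" alt="Company logo"><img src="chart.png">'
print(images_missing_alt(page))  # ['chart.png']
```

A check like this covers only one narrow aspect of accessibility. Keyboard navigation, color contrast, and behavior with real assistive technology still need dedicated tools and manual testing.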

Web Accessibility Testing Tools

| Product | Vendor | URL |
| --- | --- | --- |
| AccVerify | HiSoftware | http://www.hisoftware.com |
| Bobby | Watchfire | http://www.watchfire.com |
| WebXM | Watchfire | http://www.watchfire.com |
| Ramp Ascend | Deque | http://www.deque.com |
| InFocus | SSB Technologies | http://www.ssbtechnologies.com/ |